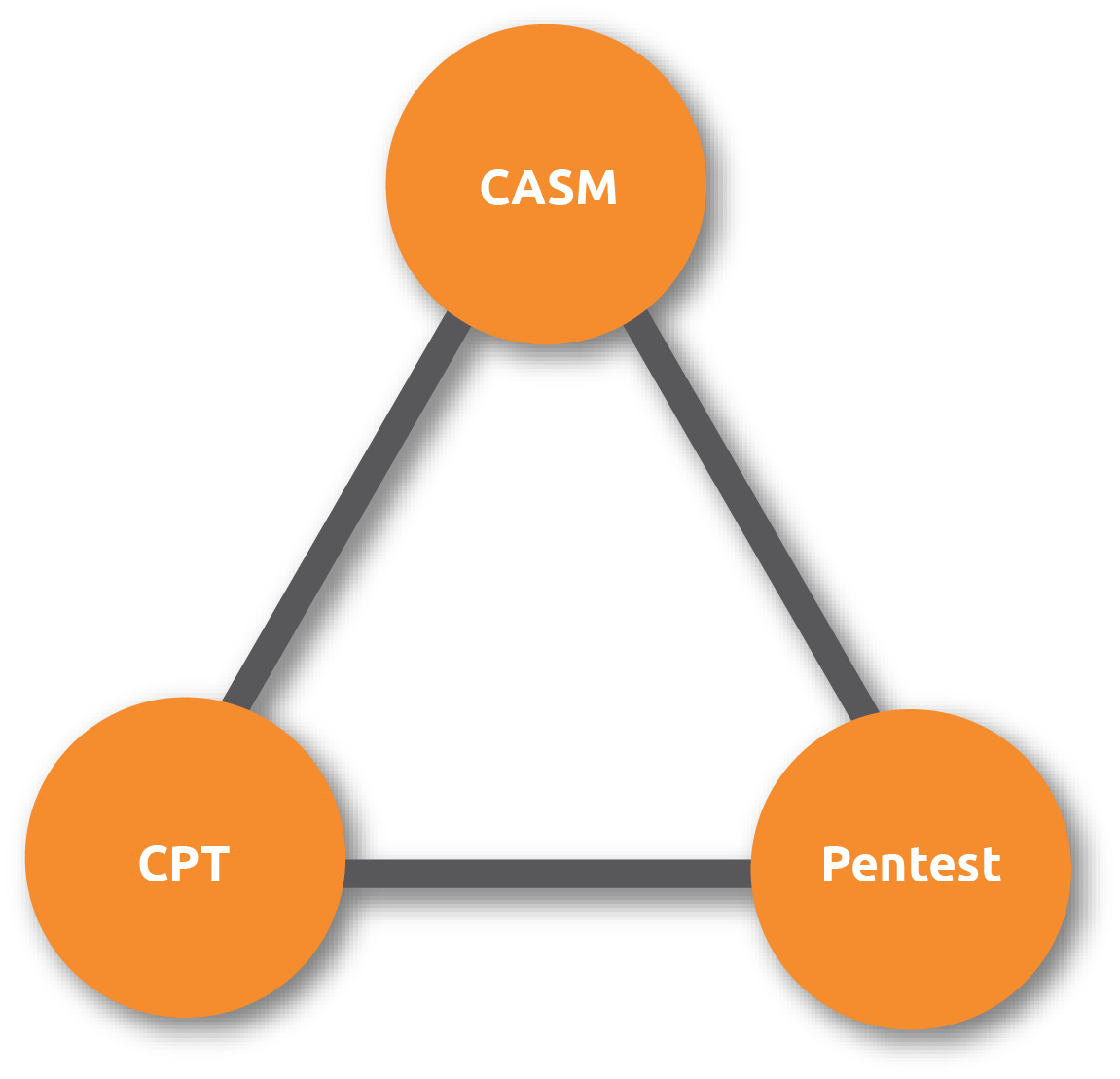

Penetration testing (pen testing) and continuous penetration testing let security experts stress-test your security posture by proactively hunting for vulnerabilities and identifying potential issues. However, any pentest is only as good as the partner conducting it. You need a partner that is thorough and ruthless, so you get the information you need to keep your network secure.