The use of QR codes has grown exponentially in the last few years. So much so that the software for reading QR codes now comes as a default in the camera settings on most mobile devices. By just taking a photograph of a QR code the camera automatically brings up an option to open a link to access information.

The problem currently is that there is no way to verify if the link will take you to where it says it will, especially as most of the URLs shown by QR readers display as short links. Humans can’t read the digital signature, and there’s no way to manually identify what information is contained in a QR code or where it’ll lead. For individuals and businesses this poses a security risk.

Consider how many QR codes exist in public places and how broadly they’re used in marketing. From parking garage tickets to restaurant menus, promotions and competitions in-store. Now consider that QR codes can easily be created by anyone with access to a QR creator app. Which means they can also be misused by anyone. It’s really not hard for someone to create and print a QR code to divert users to an alternate URL and place it over a genuine one on a restaurant menu.

What led to the rise in adoption of QR codes

QR codes were created in the mid-1990s by a subsidiary of Toyota, Denso Wave. The purpose of the QR code development was to be able to track car parts through manufacturing and assembly. However, the developers created it as an open code with the intention that it could be freely used by as many people as possible. Marketers saw the opportunity in the convenience it offered and soon it became a popular way to distribute coupons and other promotions.

When the pandemic hit and social distancing became a requirement, QR codes were seen as the ideal solution for many different applications. Instead of having to hand over cash or a credit card, a QR code could be scanned for payment. Instead of handing out menus, restaurants started offering access to menus through QR codes. In many ways the pandemic was largely responsible for the acceleration of QR code adoption. QR codes were seen as a “safer” no-contact solution. But in making things easier and more convenient for consumers, this has created a minefield when it comes to security.

How do QR codes create vulnerabilities compared to email?

Over the years people have learnt not to click on just any link that comes through their email account. There are a few basic checks that can be done. These include independently verifying where the email came from, confirming that the person or company is a known entity, and checking the destination URL of the link.

The problem with QR codes is that none of this information is available from looking at the code. It’s just a pattern of black and white blocks. Even when the link is brought up, it is usually a short link, so it’s not possible to validate the URL. On email there are a number of security options available, including firewalls, anti-phishing and anti-virus software that can scan incoming emails and issue alerts. But nothing like this exists for QR codes.

Currently there is no software or system capable of scanning and automatically authenticating a QR code in the same way as an anti-virus would do for email. Without technology available to help with security, reducing vulnerabilities is reliant on education.

Best practices to reduce vulnerabilities:

As most QR codes are scanned with a mobile device, and most employees also access company emails and apps from their phones, there needs to be greater awareness of the risks that exist. Criminals are increasingly targeting mobile phones and individual identities in order to gain access to business systems. If an employee inadvertently clicks on a link from a QR code that is from a malicious source, it could set off a chain reaction. With access to the phone, it may also be possible to gain access to all the apps and systems on that phone – including company data.

From a user perspective, the key thing to know is that gaining access through a QR code requires manual input. The camera on a mobile phone may automatically scan a QR code when it sees it, but it still requires the user to manually click on the URL for anything to happen. That is the best opportunity to stop any vulnerability. Dismiss the link and there’s no risk. The QR code can’t automatically run a script or access the device if the link is ignored.

From a business perspective, if you’re using QR codes and want people to click on them, you need to find ways to increase transparency and show where the link is sending them. The best way to do this is to avoid the use of short links. Show the actual URL and provide a way to validate that it’s a genuine promotion or link to your website.
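The check a cautious user performs when a QR reader shows a link can be sketched in a few lines of Python. This is only an illustration: the shortener list and the trusted domain below are hypothetical placeholders, not a vetted allowlist, and the function only inspects the URL text rather than following it.

```python
from urllib.parse import urlparse

# Hypothetical values for illustration only; a real list would be maintained
# by the business or its security team.
KNOWN_SHORTENERS = {"bit.ly", "tinyurl.com", "t.co"}
TRUSTED_DOMAINS = {"example.com"}


def assess_qr_url(url: str) -> str:
    """Return a rough verdict for a URL decoded from a QR code."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()

    # Anything that is not HTTPS is rejected outright.
    if parsed.scheme != "https":
        return "reject: not https"

    # A short link hides the real destination, so it cannot be validated by eye.
    if host in KNOWN_SHORTENERS:
        return "caution: short link hides destination"

    # Accept the trusted domain itself or a true subdomain of it.
    for domain in TRUSTED_DOMAINS:
        if host == domain or host.endswith("." + domain):
            return "ok: trusted domain"

    return "caution: unknown domain"
```

Note that a look-alike host such as `example.com.evil.test` is not treated as trusted, because the match requires the trusted domain to be the end of the hostname.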

QR code takeaway:

QR codes are in such broad circulation already, they’re not going away. But it’s a personal choice whether or not to use them. There’s nothing more personal in terms of technology than a mobile phone. If people want to improve their identity security there has to be a greater awareness of where the risks lie. Protecting devices, personal information and even access into company systems starts with a more discerning approach to QR codes.

Because the technology doesn’t currently exist to validate or authenticate QR codes, we need to learn how to use them in a safe way. We had to learn (often the hard way) not to insert just any memory card into a computer or open emails without scanning and validating them. Similarly, there needs to be a greater awareness to not scan just any QR code that’s presented.

To hear a more detailed discussion of QR codes and the security risks they pose, watch Episode 25 of IT Trendsetters Interview Series.

In boxing, the attributes that make up a champion are universally understood. A swift jab, a bone-crunching cross, and agile footwork are all important. However, true champions like Robinson, Ali, and Leonard knew how to defend against incoming attacks and avoid unnecessary punishment at all costs.

The same is true for a champion DNS security solution: it should help you avoid unnecessary breaches, protect against incoming attacks, and keep your data and systems safe at all costs. Your solution must not only be able to deliver content quickly and efficiently, but also protect against all types of incoming threats. It must be able to defend against DDoS attacks, data leaks, and new malicious offensive strategies.

When a boxing champion is able to avoid getting hit and can develop a shield against blows, they stay in the ring longer and are more likely to succeed. Again, the same is true for your business when it comes to developing a champion DNS security solution. Here are some of the features and attributes your DNS security solution should have:

A champion solution will increase the return on investment from your other security investments. It will also, without requiring additional effort from you or a third party, secure every connection over physical, virtual, and cloud infrastructure.

A champion solution provides comprehensive defense using the existing infrastructure that runs your business—including DNS and other core network services. BloxOne Threat Defense, for example, maximizes brand protection by securing your existing networks and digital imperatives like SD-WAN, IoT, and the cloud.

SOAR (security orchestration, automation, and response) solutions help you work smarter by automating the investigation and response to security events. A champion DNS solution will integrate with your SOAR platform to help improve efficiency and effectiveness.

A champion solution will integrate easily into your current environment and scale seamlessly as your business grows. It should not require new hardware or degrade the performance of any existing network services.

Most importantly, a champion DNS security solution must be able to defend against any potential incoming threats. It must be able to defend against DDoS attacks, data leaks, and other malicious threats.

BloxOne Threat Defense is a comprehensive, cloud-native security solution that meets all of the criteria, and more. It offers industry-leading features such as DNS firewalling, DDoS protection, and data leak prevention. BloxOne Threat Defense is easily scalable, integrates seamlessly with existing SOAR solutions, and maximizes ROI from your other security investments.

Don't leave your business unprotected against the ever-evolving landscape of DNS threats. A good offensive recovery is useful, but an adaptable defensive strategy is what separates a true DNS security champion from the rest.

To learn more about how BloxOne Threat Defense can help you defend against incoming threats, contact us and book a free trial today!

Data center consolidation, increased business agility and reduced IT system costs are a few of the benefits associated with migrating to the cloud. Add improved security to these and there is a compelling case for cloud migration. As part of the digital transformation process, companies may implement what they consider the best tools, and have the right people and policies in place to secure their working environment. But is it enough?

Technology is continually evolving and so are the ways in which cybercriminals attack, which means that no system is entirely secure. Every small change or upgrade has the potential to create a vulnerability. In that way, operating in the cloud is not all that different from having on-site systems that need to be tested and defended.

Understanding the most common mistakes made in cloud security can help companies become more aware of where vulnerabilities exist. We highlight the top five we often come across when testing:

Skipping system hardening is one of the most common issues that comes up as a vulnerability in cloud systems. Normally as part of any on-site data center change or upgrade, there would be a process of removing the unneeded services and applications, then checking and patching the system to ensure it’s the latest version to reduce the number of vulnerabilities. But when new systems are set up in the cloud, some of these steps are often skipped. It could simply be a case of systems being exposed to other networks or the internet before they’re hardened. More often though, the steps are simply overlooked, and this creates vulnerabilities.

Frequently vulnerabilities occur through remote desktop protocols, SSH, open file shares, database listeners, and missing ACLs or firewalls. Usually these points of access would be shielded by a VPN, but now they’re being exposed to the internet. An example of how this could happen is through default accounts and passwords. If these defaults weren’t removed or secured during setup, and SSH or databases are inadvertently exposed to the internet, it opens up a pathway for an attacker to access the system through the default logins and passwords.
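A quick way to see whether any of these access points answer from outside the VPN is a simple TCP connection test. The following is a minimal sketch, not a substitute for a proper scan or penetration test; the port list is an assumption chosen to match the services named above, and it should only be run against hosts you own or are authorized to test.

```python
import socket

# Common default ports for the services mentioned above. The selection is an
# assumption for illustration; real deployments may use other ports.
RISKY_PORTS = {
    22: "SSH",
    445: "SMB file share",
    1433: "SQL Server listener",
    3306: "MySQL listener",
    3389: "Remote Desktop (RDP)",
    5432: "PostgreSQL listener",
}


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat as not exposed.
        return False


def exposed_services(host: str, ports=None, timeout: float = 1.0) -> dict:
    """Return the subset of ports (port -> service name) that accept connections."""
    ports = RISKY_PORTS if ports is None else ports
    return {
        port: name
        for port, name in ports.items()
        if is_port_open(host, port, timeout)
    }
```

Running `exposed_services` against a cloud server's public address from outside the network gives a first indication of which services are reachable without the VPN.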

While this is often seen in on-site systems, it is more prevalent in cloud systems, perhaps because there seems to be less vigilance when migrating to the cloud. Weak authentication is a concern, as are easy authentication bypasses, where an attacker is able to skip authentication altogether and start initiating queries to find vulnerabilities within a system.

Basic system controls such as firewalls, VPN, and two-factor authentication need to be in place as a first line of defense. Many cloud servers have their own firewalls which are more than adequate, but they need to be activated and visible. Another common vulnerability can exist in a hybrid on-site and cloud system that is connected by an S2S VPN. A vulnerability in the cloud system could give an attacker access to the on-site system through that supposedly secure link.

When a cloud server has been compromised, the first thing the affected company is asked for is the logs showing access, firewalls and possible threats to the different systems hosted within the cloud. If these logs don’t exist or haven’t been properly set up, it becomes almost impossible to monitor and identify where the attacks originated or how they progressed through the system.

While there is a big movement towards cloud servers, many companies don’t give the same level of consideration to securing their systems in the cloud as they have for years on their on-site servers. This is where penetration testing is hugely valuable in that it can identify and report on vulnerabilities and give companies an opportunity to reduce their risk.

The approach to penetration testing on cloud servers is no different from on-site servers because, from an attacker’s point of view, the interest is in what can be accessed. Where that information is located makes no difference. Attackers are looking for vulnerabilities to exploit. There are some areas in the cloud where new vulnerabilities have been identified, such as sub-domain takeovers, open AWS S3 buckets, or even open file shares that give internet access to private networks. Authentication systems are also common targets. Penetration testing aims to make vulnerabilities known so that they can be corrected to reduce the risk a company is exposed to.

For companies that want to ensure they’re staying ahead of vulnerabilities, adversary simulations provide an opportunity to collaborate with penetration testers and validate their controls. The simulation process demonstrates likely or common attacks and gives defenders an opportunity to test their ability to identify and respond to the threats as they occur. This experience helps train responders and improve system controls. A huge benefit of this collaborative testing approach is sharing of information such as logs and alerts. The penetration tester can see what alerts are being triggered by their actions, while the defenders can see how attacks can evolve. If alerts aren’t being triggered, this identifies that logs aren’t being initiated which can then be corrected and retested.

As companies advance in their digital transformation and migrate more systems to the cloud, there needs to be an awareness that risk and vulnerabilities remain. The same level of vigilance taken with on-site systems needs to be implemented alongside cloud migrations. And then the systems need to be tested. If not, attackers will gladly find and exploit vulnerabilities, and this is not the type of risk companies want to be exposed to.

To learn about Cloud Penetration Testing and Cloud Adversary Simulation services reach out to Synercomm.

Having access to data on a network, whether it’s moving or static, is the key to operational efficiency and network security. This may seem obvious, yet the way many tech stacks are set up is to primarily support specific business processes. Network visibility only gets considered much later when there’s a problem.

For example: When there is a performance issue on a network, an application error or even a cybersecurity threat, getting access to data quickly is essential. But if visibility hasn’t been built into the design of the network, finding the right data becomes very difficult.

In a small organization, getting a crash card usually requires someone to go to the tech stack and start running traces to find out where the issue originated. It’s a challenging task and takes time. Imagine the same scenario but with an enterprise with thousands of users. Without visibility into the network, how do you know where to start to troubleshoot? If the network and systems have been built without visibility, it becomes very difficult to access the data needed quickly.

There is a certain amount of consideration that needs to be given to system architecture to gain visibility to data and have monitoring systems in place that can provide early detection – whether it’s for a cybersecurity threat or network performance. This may include physical probes on a data center, virtual probes on a cloud network, changes to user agents or a combination of all of these.

Practically, to gain visibility into a data center, you may decide to install taps on the top of the rack as well as some aggregation devices that help you gain access to the north / south traffic on that rack. The curious thing is that most cyberattacks actually happen on east / west traffic. This means that monitoring only the top of the rack won’t provide visibility or early detection of those threats. As a result you may need to plan for additional virtual taps running in either your Linux or VMware environment, which will provide a much broader level of monitoring of the infrastructure.
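The distinction between the two traffic directions can be made concrete with a small sketch: a flow whose source and destination both sit inside the organization's own address ranges is east / west, while a flow with one internal and one external endpoint is north / south. The address ranges below are hypothetical placeholders.

```python
import ipaddress

# Hypothetical internal address ranges, used for illustration only.
INTERNAL_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_internal(address: str) -> bool:
    """Return True if the address falls inside one of the internal ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in INTERNAL_NETWORKS)


def traffic_direction(src: str, dst: str) -> str:
    """Classify a flow by where its two endpoints sit."""
    src_internal = is_internal(src)
    dst_internal = is_internal(dst)
    if src_internal and dst_internal:
        return "east-west"    # server to server, inside the environment
    if src_internal or dst_internal:
        return "north-south"  # crossing the boundary of the environment
    return "external"         # neither endpoint belongs to us
```

A tap that only sees traffic leaving the rack will capture the `north-south` flows and miss most of the `east-west` ones, which is why the additional virtual taps matter.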

Most companies also have cloud deployments, and these could go back 15 years, using any number of cloud systems for different workflows. The question to ask is: Does the company have the same level of data governance on the data centers it no longer owns, and only uses through an application, as it used to have on its own data center? Most times the company won’t have access to that infrastructure. This means that a more measured approach is needed to determine how monitoring of all infrastructure can be achieved. Without a level of visibility it becomes very difficult to identify vulnerabilities and resolve them.

More than two years after pivoting infrastructure to enable employees to work from home, many issues relating to data governance and compliance are now showing. These further highlight the challenges that occur when visibility isn’t built into infrastructure design. In reality, the pivot had to happen rapidly to ensure business continuity. At the time, access was the priority and given the urgency it wasn’t possible to build in the required levels of security and visibility.

With hybrid working becoming the norm for many companies, the shift in infrastructure is no longer considered temporary. Companies have systems that span data centers, remote workers and the cloud and there are gaps when it comes to data governance and compliance. IT and cybersecurity teams are now testing levels of system performance and working to identify possible vulnerabilities to make networks and systems more secure.

There is an added challenge in that tech stacks have become highly complex with so many systems performing different functions in the company. This is especially true when you consider multilayered approaches to cybersecurity and how much infrastructure is cloud based. Previously, when companies owned all the systems in their data centers, there were a handful of ways to manage visibility and gain access to data. Today, with ownership diversified in the different systems, it’s very difficult to have the same level of data visibility.

As system engineers develop and implement more tools to improve application and network performance, the vision may be to manage everything in one place and have access to all the data you need. But even with SD-LAN, technology is not yet at a point where one system or tool can do everything.

For now the best approach is to look at all the different locations and get a baseline for performance. Then go back 30 or 60 days and see if that performance was better or worse. When new technology is implemented it becomes easier to identify where improvements have taken place and where vulnerabilities still exist.
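As a rough illustration of the baseline comparison described above, the sketch below compares the average of a current set of measurements with an earlier window, for example latency samples from 30 days ago. The 10% tolerance and the use of latency in milliseconds are assumptions for the example, not recommendations.

```python
from statistics import mean


def compare_to_baseline(baseline_ms, current_ms, tolerance=0.10):
    """Compare current latency samples with a baseline window.

    Returns "worse", "better" or "unchanged" depending on whether the mean
    latency moved by more than the given tolerance (a fraction, 0.10 = 10%).
    """
    baseline_avg = mean(baseline_ms)
    current_avg = mean(current_ms)

    # Relative change: positive means latency went up, i.e. performance dropped.
    change = (current_avg - baseline_avg) / baseline_avg

    if change > tolerance:
        return "worse"
    if change < -tolerance:
        return "better"
    return "unchanged"
```

Running the same comparison per location, before and after a new tool is introduced, makes it easier to see where the change actually helped.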

Even with AI/ML applications, it comes back to data visibility. AI may have the capacity to generate actionable insights, but it still requires training and vast volumes of data to do so. Companies need to be able to find and access the right data within their highly complex systems to be able to run the AI applications effectively.

Traditionally, and especially with cloud applications, the focus is usually on building first and then securing systems later. But an approach that focuses on how the company will access critical data as part of the design helps build more robust systems and infrastructure. Data visibility is very domain specific, and companies that want to stay ahead in terms of system performance and security are being more proactive about incorporating data visibility into systems design.

There’s no doubt that this complex topic will continue to evolve along with systems and applications. To hear a more in-depth discussion on the topic of data visibility, watch the recent IT Trendsetters podcast with Synercomm and Keysight.

When US-based companies are expanding and setting up offices in foreign countries, or they’re already established but need to do a systems upgrade, there are two primary options available. The company might look to procure equipment locally in-country. Or they might approach their US supplier and then plan to export the equipment.

At first it may appear to be a simple matter of efficiency and cost. How to get the equipment on the ground at the best price and as quickly as possible. But both options are mired in complexity with hidden costs and risks that aren’t immediately obvious. It’s when companies make assumptions that they run into trouble. Even a small mistake can be very costly, impacting the business reputation and bottom line.

Being aware of common pitfalls when looking to deploy IT systems internationally can help decision makers reduce risk and go about deployment in a way that benefits the company in both the short and long term. To highlight what factors need to be taken into consideration, we discuss some common assumptions and oversights that can land companies in trouble.

Initially when getting a quote from a local reseller, it may appear to be more cost effective compared to international shipping and customs clearance. However, it’s important to know if the purchase is subject to local direct or indirect taxes and if they have been included in the quote. For example: In some European countries VAT (Value Added Tax) is charged at 21% on all purchases. If this is not included and specified on the quote, companies could inadvertently find themselves paying 21% more than budgeted.
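The effect of an omitted tax line is easy to check with a short calculation. The sketch below uses the 21% rate from the example above; the quote amount of 10,000 is a made-up figure for illustration.

```python
from decimal import Decimal


def total_with_vat(net_price: Decimal, vat_rate: Decimal = Decimal("0.21")) -> Decimal:
    """Return the gross price after adding VAT, rounded to two decimals."""
    gross = net_price * (1 + vat_rate)
    return gross.quantize(Decimal("0.01"))


# Hypothetical quote that did not state whether VAT was included.
quoted = Decimal("10000")
payable = total_with_vat(quoted)
print(payable)           # 12100.00
print(payable - quoted)  # 2100.00 more than budgeted
```

`Decimal` is used rather than floating point so that currency amounts are not affected by binary rounding.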

IT systems may require procurement from multiple local vendors and this can be a challenge when it comes to managing warranties, maintenance contracts and the assets. Even if the vendors are able to provide a maintenance service, the responsibility still rests with the company to ensure the assets are accurately tagged and added to the database. When breakdowns occur or equipment needs to be replaced, the company will need to have the information on hand to know how to go about that, and if they don’t, it can be problematic. With a central point of procurement, asset management can be much easier.

Operationally, dealing with suppliers and vendors in a foreign destination can be challenging. Without local knowledge and understanding of local cultures and how business operates within that culture, it’s easy to make mistakes. And those mistakes can be costly. For example: local vendors may have to bring in stock and this could result in delays. It may be difficult to hold the vendor to account, especially if they keep promising delivery, yet delays persist. Companies could be stuck in limbo, waiting for equipment. Installation teams are delayed and operational teams become frustrated. These types of delays can end up costing the company significantly more than what was originally budgeted for the deployment.

Some companies may decide to stick with who they know and buy from their usual US supplier with the view to ship the equipment to the destination using a courier or freight forwarder. The challenge comes in understanding international import and export regulations. Too often companies will simply tick the boxes that enable the goods to be shipped, even if it isn’t entirely accurate. There are many ways to ship goods that might get them to a destination, but only one correct way that ensures the shipment is compliant. Knowing and understanding import regulations, taxes and duties, including how they differ between countries is the only way to reduce risk and avoid penalties.

Even within regions, countries have different trade and tax regulations regarding how imports are categorized and processed through customs. On major IT deployments with many different equipment components this can become highly complex. The logistics of managing everything is equally complex and any mistakes have knock-on effects. Keep in mind that the company usually has to work with what is deployed for a number of years. This makes the cost of getting a deployment wrong a major risk. Non-compliance with trade and tax regulations can result in stiff penalties that set a company back financially. If logistics and installation go awry, the result can be company downtime, which has operational implications.

There’s value in having centralized management of international IT deployment. Especially when that centralized management incorporates overseeing trade and taxation compliance, procurement and asset management, as well as logistics and delivery. If at any stage of the deployment there are queries or concerns, there’s a central contact to hold accountable and get answers.

Initially the costs of managing deployment centrally may appear to be higher, but the value comes from removing the risk of non-compliance and reducing the risk of delays and operational downtime. Plus, having an up-to-date asset database makes it significantly easier to manage maintenance, warranties and breakdowns going forward.

Companies debating which deployment route is most efficient need to consider the possible pitfalls and their ability to manage them independently. If there’s any doubt, then a centrally managed solution should be a serious consideration.

To hear a more detailed discussion on these and other common deployment pitfalls listen to our recent podcast on IT Trendsetters. The podcast contains some valuable and enlightening discussion points that you may find helpful.


Every project begins by carefully listening to our customers' problems and requests. Through this process, we learn about their underlying priorities, objectives, and unique project requirements.

Instead of spending our time “selling” to our customers and prospects, we focus our energies on investing in the right solutions for our customers and letting our expertise, industry reputation, and excellent work speak for itself.

No matter how large or small your deployment is, you need to know that you can trust your logistics and IT partner to provide you with tailored solutions, sound advice, and trustworthy white-glove service. This whitepaper was created to help you learn more about the importance of trust in your IT partnerships.

Learn more about ImplementIt and how we can make your next project stress-free by clicking here.

While project managers possess a wide variety of skills, few have the extensive experience needed for handling the logistics of large, complex deployments and could benefit from expert advice and assistance.

In this whitepaper, we reveal how to empower your project managers to handle the logistics of large, complex deployments by adopting an IT-focused approach.

“Many companies claim to offer ‘white-glove service,’ offering a rigid set of one-size-fits-all processes and procedures that allow them to check off items on a checklist. Our approach is different. We value our customer relationships immensely and think of our customers as part of the team. We are driven by a deeply seeded and pervasive culture that drives us to always do right by each and every customer. White-glove is more than a checklist; it’s a way of conducting business that governs every aspect of our company.”

Are you interested in how you can empower your project manager to tackle large-scale deployments with confidence? Fill out the form above to gain access to our full white paper.

SynerComm's Continuous Attack Surface Management (CASM Engine®) and Continuous Penetration Testing was named a top-five finalist in the Best Vulnerability Management Solution category for the 2022 SC Awards

SynerComm's CASM Engine® has been recognized as a Trust Award finalist in the Best Vulnerability Management Solution category for the 2022 SC Awards. Now in its 25th year, the SC Awards are cybersecurity's most prestigious and competitive program. Finalists are recognized for outstanding solutions, organizations, and people driving innovation and success in information security.

"We created the CASM Engine® with the goal of being completely hands-off, while still providing multiple user-types with the most detailed and accurate attack surface reporting available today. A single solution that solves your asset inventory and monitoring, vulnerability management, attack surface management, and reporting needs," said Kirk Hanratty, Vice President/CTO and Co-Founder of SynerComm. "We are proud that our solution has been recognized as a Trust Award finalist this year."

The 2022 SC Awards were the most competitive to date, with a record 800 entries received across 38 categories - a 21% increase over 2021. This year, SC Awards expanded its recognition program to include several new award categories that reflect the shifting dynamics and emerging industry trends. The new Trust Award categories recognize solutions in cloud, data security, managed detection and more.

"SynerComm and other Trust award finalists reflect astonishing levels of innovation across the information security industry, and underscore vendor resilience and responsiveness to a rapidly evolving threat landscape," said Jill Aitoro, Senior Vice President of Content Strategy at CyberRisk Alliance. "We are so proud to recognize leading products, people and companies through a trusted program that continues to attract both new entrants and industry mainstays that come back year after year."

Entries for the SC Awards were judged by a world-class panel of industry leaders from sectors including healthcare, financial services, manufacturing, consulting, and education, among others.

Whether doing security research or troubleshooting networks, network sniffers and packet analysis can be invaluable tools. If you're a network engineer like me, you've probably been holding onto your favorite 4 or 8-port 10/100 hub for 25 years now. The reason is that hubs (not switches) make great network taps. By design, all Ethernet transmissions on a hub are sent to all ports. To monitor another device, you can place it on a hub along with your laptop/sniffer and then connect that hub to the rest of your network (if needed). All packets sent to or from this device will also be sent to your sniffer on the hub. Even 25 years later, the hub I bought during college still makes a great network tap. It was only recently that I needed something a little more powerful.

Hubs date back to the early years of Ethernet when twisted-pair cabling started being used for networking (like Cat-3/Cat-5). These networks initially ran at only 10 Mb/s and early hubs were also limited to that throughput. As technology advanced, Ethernet speeds increased to 100 Mb/s and new Ethernet switches were created. Unlike hubs, switches only forward packets to the port needed for the packet to reach its intended destination. This was done because hubs can suffer from "collisions" that occur when more than one device tries to transmit at the same time. Switches eliminate packet collisions and allow networks to remain efficient as the number of networked devices grows. Modern switches also support 10/100 Mb/s and gigabit (1,000 Mb/s) throughputs. While this is great for network performance, most inexpensive switches can't be used as a network tap.

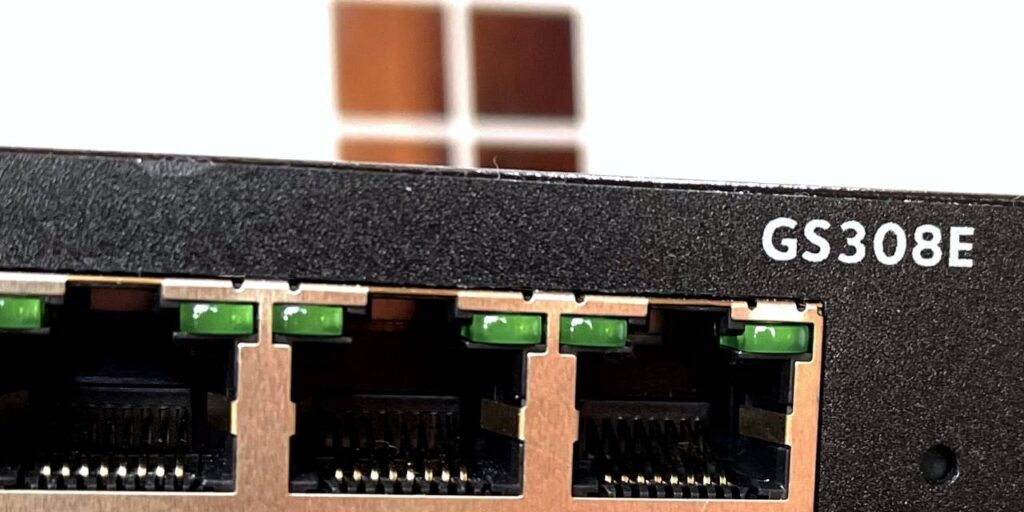

So, what can you do when you need to monitor a high-speed gigabit link and can't afford an expensive network tap? How about the $39.99 10/100/1000 8-port Netgear GS308E switch with "Enhanced Features"? As you probably guessed, one of those enhanced features, called Port Mirroring, allows this switch to be used as a network tap. And unlike a hub, port mirroring allows you to monitor another port without it also monitoring you.

Follow the instructions below to configure a high-speed (up to gigabit) network tap using the Netgear GS308E switch.

Physical connections:

Port 1 – Device (or Network Segment) Being Monitored

Port 2 – Sniffer (My Laptop)

Port 8 – Uplink to Network (optional)


That's all there is to it! Make sure your devices are connected to the proper ports and start your network analysis.
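The port layout above (port 1 monitored, port 2 sniffer, port 8 uplink) can be modeled with a short Python sketch of what port mirroring does conceptually. This is a simplified illustration, not the GS308E's firmware: the `MirroringSwitch` class, its parameters, and the MAC labels are hypothetical, and how a real switch treats ordinary traffic on the mirror destination port may differ.

```python
from typing import Dict, List, Set


class MirroringSwitch:
    """Toy learning switch that copies traffic on source ports to a mirror port."""

    def __init__(self, num_ports: int, mirror_sources: Set[int], mirror_dest: int) -> None:
        self.num_ports = num_ports
        self.mirror_sources = mirror_sources  # ports being monitored
        self.mirror_dest = mirror_dest        # port the sniffer is plugged into
        self.mac_table: Dict[str, int] = {}   # MAC address -> port last seen on

    def forward(self, in_port: int, src_mac: str, dst_mac: str) -> List[int]:
        # Normal switching: learn the sender, then look up the destination.
        self.mac_table[src_mac] = in_port
        known = self.mac_table.get(dst_mac)
        if known is None:
            normal = [p for p in range(1, self.num_ports + 1) if p != in_port]
        elif known == in_port:
            normal = []
        else:
            normal = [known]

        # Mirroring: copy the frame if it enters or leaves a monitored port.
        out = set(normal)
        if in_port in self.mirror_sources or out & self.mirror_sources:
            out.add(self.mirror_dest)
        out.discard(in_port)  # never send a frame back out the port it came in on
        return sorted(out)


if __name__ == "__main__":
    sw = MirroringSwitch(num_ports=8, mirror_sources={1}, mirror_dest=2)
    sw.forward(8, "gateway", "device")                 # learn the gateway
    print(sw.forward(1, "device", "gateway"))          # device -> uplink, copied to sniffer
    print(sw.forward(8, "gateway", "device"))          # uplink -> device, copied to sniffer
    print(sw.forward(2, "laptop", "gateway"))          # sniffer's own traffic is not mirrored
```

The last call illustrates the one-way property described earlier: the sniffer sees everything to and from port 1, but the monitored device never receives copies of the sniffer's traffic.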

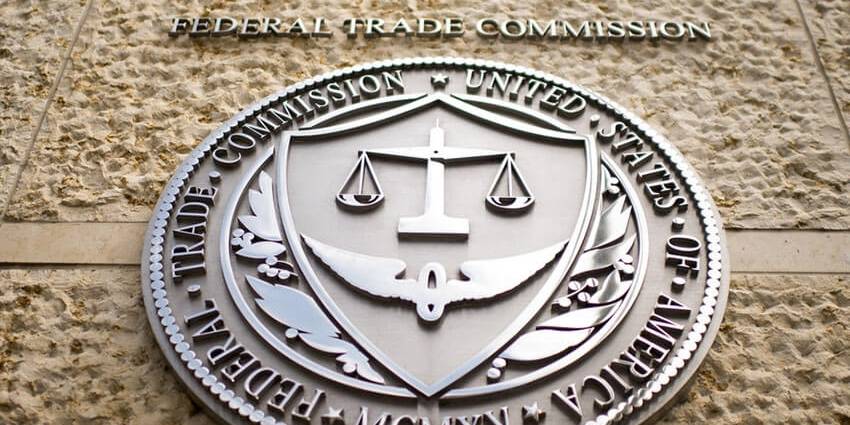

Back in 1999, the Gramm-Leach-Bliley Act (GLBA) was passed in the United States. Its main purpose was to allow banks to offer services that had previously been forbidden by laws passed even farther back, in 1933. As a result, the new rules surrounding these services applied not only to banks but also to any organization that offered them.

A primary component of this Act, Section 501, requires the protection of non-public personal information. It states, "...each financial institution has an affirmative and continuing obligation to respect the privacy of its customers and to protect the security and confidentiality of those customers' nonpublic personal information."

Privacy, Security, Confidentiality. Could you identify a hot topic in information security today that doesn't involve one or all three of those areas? Couple that intense interest with the changes in technology that have occurred over the past 20 years and you can understand why amendments to the GLBA were needed.

The main rule we will discuss here is the Standards for Safeguarding Customer Information, commonly called the Safeguards Rule. Originally published in 2001, this rule was just amended (January 10, 2022) and some of the most important provisions became effective on December 9, 2022. The overarching goal of this rule is to require that you have "the administrative, technical, or physical safeguards you use to access, collect, distribute, process, protect, store, use, transmit, dispose of, or otherwise handle customer information."

You can't duck the issue based on size. Nearly all rules apply except for some new elements which apply to entities that maintain fewer than 5,000 consumer records. The most important qualifier is:

If either of these is true, then the GLBA rules apply to you.

What is a financial institution according to the GLBA? The exact definition is, "any institution the business of which is engaging in an activity that is financial in nature or incidental to such financial activities as described in section 4(k) of the Bank Holding Company Act of 1956, 12 U.S.C. 1843(k)." In case you don't have the Bank Holding Company Act handy, here is a list of examples of financial institutions that the GLBA applies to, as noted in 16 CFR 314.2(h)(2)(iv):

These are just some examples, and the list is not all-inclusive. Note that simply letting someone run a tab, or accepting payment by a credit card that was not issued by the seller, does not make an entity a financial institution.

At the heart of the Safeguards Rule are a number of key elements involving the development, maintenance, and enforcement of a written information security plan (ISP). The key aspects and notable amendments are:

Covered financial institutions should be in compliance with the non-amended components of the Safeguards Rule already, since the formal effective date of the rule was January 10, 2022. The FTC has allowed for an effective date of December 9, 2022, for the amended provisions due to the length of time required to implement them.

Besides the potential costs associated with breaches, successful malware attacks, ransomware, and the like, there are penalties that can be assessed by the FTC for non-compliance. These penalties can apply to the enterprise and/or individuals responsible for compliance as follows:

So, if this does apply to you and your organization, hopefully you are already compliant and none of this was a surprise to you. If this doesn't apply to you, I commend you for reading on. And if it applies and you are completely surprised by the requirements and amendments, the clock is ticking! Contact SynerComm for compliance support.